
AI Center Hotspots, Source: Oxford University AI Centre

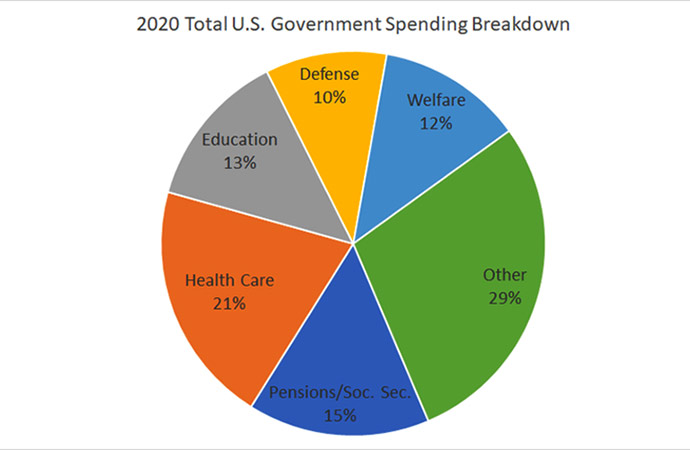

A new form of supremacy is imminent in the AI bubble, one measured not in oil barrels or military might, but in GPUs and data center capacity. The world is grimly split between the compute-rich and the compute-poor: only 32 countries possess the heavyweight data infrastructure needed to train frontier AI models. The rest of the planet is relegated to the role of digital spectator in humanity's steepest technological uptick. For now and for years to come, AI's appetite will keep galvanising geopolitical relations while gallivanting across the planet in search of new biomes to fry.

Compute Power Is the New Oil

A sobering picture of digital colonialism emerges: U.S. tech giants command 87 of the world's elite AI hubs, representing nearly two-thirds of global capacity. China follows with 39 facilities, while Europe currently scrapes by with a mere 6. Africa and South America remain virtually absent from this computational aristocracy, creating what Oxford researchers have termed the "Compute Desert".

A new data center built by OpenAI, SoftBank, and Oracle in Texas will sprawl larger than Central Park, costing $60 billion and requiring its own natural gas plant. Meanwhile, Meta commits up to $65 billion, Google pledges $100 billion, and Amazon projects over $75 billion, all in pursuit of global compute dominance.

The GPU fever driving this divide centers on Nvidia's H100 processors, which have become the "new black gold" of the AI age. Limited supply means nations without deep pockets wait at the back of the queue, creating a silicon apartheid that extends far beyond hardware.

Language bias compounds the divide: models favor English and Chinese speakers while marginalizing speakers of languages such as Swahili. "Countries without compute power risk becoming digital colonies, forced to rent brains and servers from abroad," warns an analyst at HROne in China.

The talent drain sees top researchers from Kenya or Argentina hop continents just to access GPU clusters. Startup ceilings, meanwhile, force entrepreneurs in least developed countries (LDCs) to rent compute overseas, where they face higher latency, steeper bills, and foreign-law headaches, as reported by SemiAnalysis.

Efforts to bridge this chasm remain woefully inadequate.

Brazil's $4 billion AI push and the EU's €200 billion sovereignty fund aim to build local clouds, but their chips still ship from California. Africa's Cassava is opening a flagship GPU farm, yet it will satisfy perhaps only 10-20% of regional demand. The "Compute South" remains locked out, with no olive branch of consequence extended.

Carbon Expenditure of Silicon Intelligence

The server farms powering the revolution, and the promise of artificial general intelligence, come with an energy appetite that has already derailed global climate commitments.

A single ChatGPT query consumes approximately 10 times more electricity than a traditional Google search, roughly the energy needed to power a light bulb for 20 minutes. With millions of daily users, this seemingly trivial difference compounds into something more sinisterly voracious. Google's sustainability report revealed that the company's GHG emissions have surged 48% since 2019, with data center energy consumption and AI supply chain emissions at the helm.

NPR reports that training a large language model like GPT-3 consumes 1,287 megawatt-hours of electricity, generating approximately 552 tons of carbon dioxide. Unlike traditional computing, generative AI workloads create rapid fluctuations in energy use during different training phases, forcing power grid operators to employ diesel-based generators to absorb these spikes, according to MIT.
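As a back-of-envelope check, the two GPT-3 figures above together imply an average carbon intensity for the electricity used. The sketch below simply divides the reported emissions by the reported energy; the function name is illustrative, and it assumes the "tons" are metric.

```python
def implied_carbon_intensity(energy_mwh: float, co2_tonnes: float) -> float:
    """Return kg of CO2 emitted per kWh, given total energy and total emissions."""
    energy_kwh = energy_mwh * 1_000   # 1 MWh = 1,000 kWh
    co2_kg = co2_tonnes * 1_000       # assumption: metric tons (1 t = 1,000 kg)
    return co2_kg / energy_kwh


# Figures as quoted in the article for GPT-3 training.
gpt3_intensity = implied_carbon_intensity(energy_mwh=1_287, co2_tonnes=552)
print(f"{gpt3_intensity:.3f} kg CO2 per kWh")  # prints "0.429 kg CO2 per kWh"
```

That works out to a little over 0.4 kg of CO2 per kilowatt-hour, a figure that depends entirely on the two reported totals and says nothing about any particular grid.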

Data center electricity consumption could approach 1,050 terawatt-hours by 2026, which would make data centers the fifth-largest electricity consumer globally, ranking between Japan and Russia. Through 2030, demand from these centers is projected to increase by 15-20% annually.

For each kilowatt-hour of energy consumed, data centers require approximately two liters of water for cooling. But perhaps most concerning is the trajectory ahead. Morgan Stanley, backed by reports from the UN's International Telecommunication Union, predicts that by 2030, data centers will emit triple the amount of CO2 annually compared to scenarios without the AI boom: 2.5 billion tonnes, equivalent to roughly 40% of current U.S. annual emissions.
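To put the cooling-water ratio in scale, it can be applied to the GPT-3 training energy cited earlier (1,287 megawatt-hours). This is only a rough sketch: it assumes the approximate two-liters-per-kilowatt-hour average holds for a single training run, which the sources do not claim, and the names below are illustrative.

```python
LITERS_PER_KWH = 2.0  # approximate cooling-water ratio quoted in the article


def cooling_water_liters(energy_mwh: float,
                         liters_per_kwh: float = LITERS_PER_KWH) -> float:
    """Estimate cooling water in liters for a given energy use in MWh."""
    energy_kwh = energy_mwh * 1_000  # 1 MWh = 1,000 kWh
    return energy_kwh * liters_per_kwh


# Assumption: the data center average applies to GPT-3's training energy.
gpt3_water = cooling_water_liters(1_287)
print(f"{gpt3_water:,.0f} liters")  # prints "2,574,000 liters"
```

Under that assumption, one training run would draw on the order of 2.6 million liters of cooling water.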

Sensing the Carbon

Paradoxically, the tech straining the planet's energy resources is simultaneously emerging as one of our most powerful tools for environmental monitoring and conservation. It is akin to hiring a pyromaniac as the fire chief: terrifying, yet oddly effective.

The scope of AI's environmental applications has expanded dramatically. Machine learning algorithms now analyze vast datasets to generate climate predictions, process satellite imagery to track deforestation in real time, and identify species with unprecedented precision.

Cambridge University's neuromorphic edge AI systems deploy brain-inspired architectures that consume dramatically less energy while maintaining high computational throughput. Meanwhile, WWF's ManglarIA project in Mexico uses sensor networks, including weather stations, carbon flux towers, and camera traps, to monitor mangrove ecosystems.

"Environmentally geared AI analyzing geospatial data from our sensor network allows us to develop more effective interventions before these critical carbon sinks are irreversibly damaged," explains David Thau, Global Data and Technology Lead Scientist at WWF-US. Carbon emissions monitoring combines satellite imagery from sources like Sentinel-5 with graph neural networks to automatically detect emissions from power plants and manufacturing facilities.

The International Energy Agency estimates that adopting existing AI applications across energy, industry, transport, and buildings could reduce emissions by approximately 1.4 gigatons of CO2 annually.

Computer vision algorithms identify species from camera trap footage, while deep learning models count marine life on the sea floor to measure the impact of offshore wind farms. AI-driven satellite monitoring in the Amazon helps detect deforestation and flag illegal mining operations.

"AI is changing the way the world works, for better or worse. This is one way it could help us," notes Laura Pollock of McGill University. "Protecting biodiversity is crucial because ecosystems sustain human life. No pressure, Silicon Valley."

Shoumik Zubyer is a freelance writer.

Leave a Comment

Recent Posts

Religion and Politics: A Toxic ...

At Dhaka University, cafeteria workers have been told not to wear shor ...

Enayetullah Khan joins AsiaNet ...

AsiaNet’s annual board meeting and forum was held in Singapore, ...

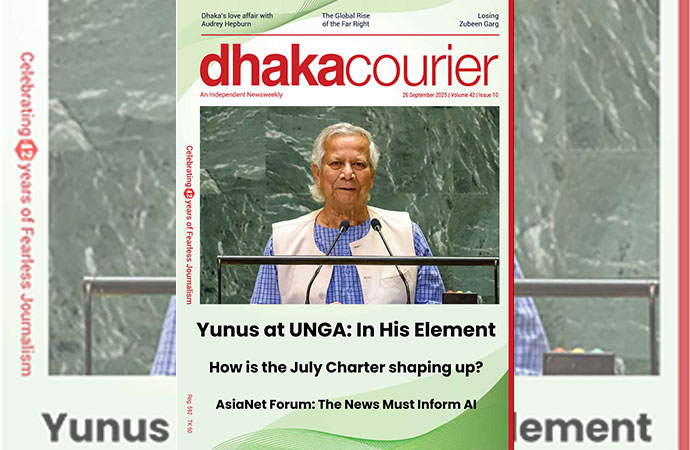

In a New York minute

Many leaders back a UN call to address challenges to ..

Defaulted loans at Non-Bank Financial Institutions ( ..

How the late Zubeen Garg embodied cultural affinitie ..